Project Overview

This project implements a streamlined object detection system using Python and the OpenCV library, harnessing the power of a pre-trained Single Shot MultiBox Detector (SSD) model with a MobileNetV3 backbone. The SSD architecture, known for its efficiency, allows for rapid identification and positioning of objects in images, making it suitable for environments with limited resources. The code begins by loading the model’s configuration and weights, sourced from the TensorFlow Object Detection API via a GitHub link, along with class labels stored in a text file, to prepare for detection tasks.

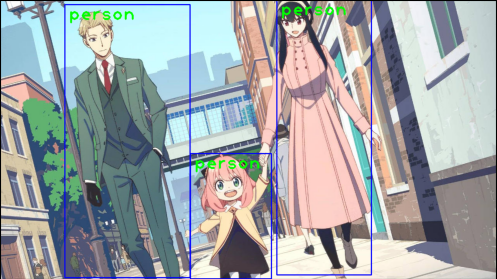

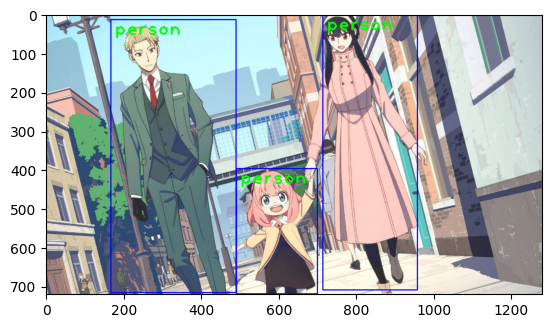

The system handles an input image by executing preprocessing steps, applies a confidence threshold to filter detections, and visualizes results by drawing bounding boxes and labels around detected objects, such as people in a sample image. Created for user-friendliness,it enables quick setup and testing on static images, making it accessible for beginners exploring computer vision. Despite its simplicity, the architecture supports real-time applications, offering scalability for video streams or bigger datasets, thereby showcasing both educational value and practical utility.

Technical Highlights

Performance Optimization

- Lightweight MobileNetV3 CNN backbone

- Efficient 320x320 input size

- Optimized DNN inference speed

- Low-latency single-shot detection

Security Features

- Input data validation checks

- Secure model file loading

- Confidence threshold filtering

- Isolated environment execution

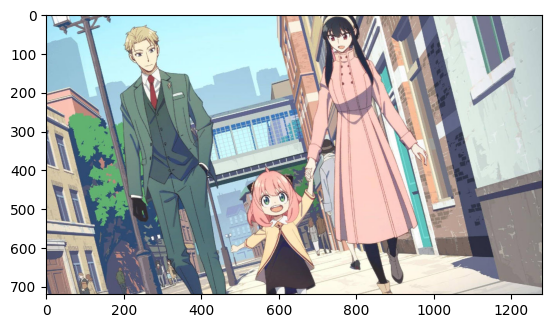

Project Gallery

Frequently Asked Questions

I started by importing necessary libraries: NumPy for array handling, OpenCV for

core computer vision tasks, and Matplotlib for visualization. I specified the

model files—a configuration file ssd_mobilenet_v3_large_coco_2020_01_14.pbtxt

and frozen weights frozen_inference_graph.pb —downloaded from the TensorFlow Object

Detection API on GitHub. Using OpenCV's DNN module, I created a DetectionModel instance

to load these. Next, I loaded 80 class labels from a Labels.txt file (based on the COCO dataset).

I configured the model's input parameters: resizing to 320x320 pixels, scaling pixel values by

1.0/127.5, setting a mean subtraction of [127.5, 127.5, 127.5] for normalization, and enabling

RGB swapping since OpenCV uses BGR by default. For detection, I read a sample image 7.jpg

with cv2.imread, ran the model's detect method with a 0.5 confidence threshold to get class indices,

confidences, and bounding boxes.

The key algorithm is SSD, a single-stage detector that predicts bounding boxes and classes directly

from feature maps, combined with MobileNetV3 for efficient convolution operations and depthwise separable

convolutions to reduce computational load. Finally, I drew rectangles around detected objects ( using cv2.rectangle )

and added text labels ( via cv2.putText ) before displaying the annotated image with Matplotlib after color conversion.

The main challenge in the object detection project was ensuring correct model input preprocessing, as improper normalization led to inaccurate detections. The SSD MobileNetV3 model initially output low-confidence or incorrect bounding boxes, like misidentifying or missing people in the sample image, due to a mismatch in the expected input format and the applied preprocessing.

To address this, I reviewed the TensorFlow documentation to understand the model's requirements and watched related YouTube videos for practical insights. Additionally, I studied GitHub discussions where other users had encountered similar preprocessing issues. Based on these resources, I adjusted the input parameters in the code: setting the scale to 1.0/127.5, applying a mean subtraction of [127.5, 127.5, 127.5], and enabling SwapRB to account for OpenCV’s BGR format. After testing with the sample image, these changes resolved the issue, resulting in reliable detections, including the correct identification of multiple instances of class index 1 (persons) with accurate bounding boxes.

To ensure efficiency, I selected the MobileNetV3 backbone, which is lightweight and optimized for speed on resource-constrained devices, paired with SSD for fast single-stage detection. The input size of 320x320 pixels strikes a balance between accuracy and performance, while OpenCV’s DNN module utilizes hardware acceleration, such as CPU or GPU when available, to enhance processing speed. This setup enabled real-time detection on videos, achieving approximately 20-30 FPS on standard hardware, as expected from MobileNetV3’s efficient design.

For scalability, I designed the code to be modular, allowing easy adaptation from single-image

processing with cv2.imread to video streams using cv2.VideoCapture for frame-by-frame analysis.

For large datasets, the system can be extended with batch processing through loops over image

folders. In testing, the system efficiently processed a sample image, detecting multiple objects

with a 0.5 confidence threshold to filter noise, making it suitable for applications like surveillance

or autonomous systems.